Building a Secure and Scalable Multi-Tenancy Model on GKE


As engineering organizations scale, managing infrastructure for dozens of teams becomes increasingly complex. Each team needs its own environment for development, QA, staging, and production. When every team operates its own Kubernetes cluster, operations quickly become fragmented, expensive, and difficult to govern.

At LiveRamp, we evolved our infrastructure by building a multi-tenant Kubernetes (MTTN) cluster platform that supports many teams and workloads efficiently. Here’s a look at how we moved from dedicated per-team clusters to a shared, cost-effective, and highly scalable multi-tenancy solution.

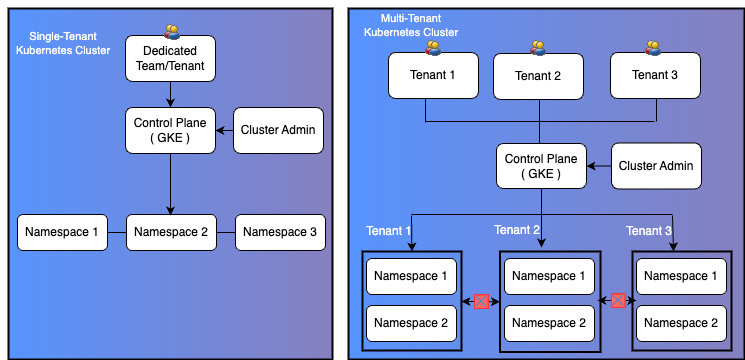

Dedicated clusters vs. multi-tenant clusters

A multi-tenant Kubernetes cluster allows multiple workloads to share a single control plane and set of resources without compromising isolation or security. At LiveRamp, this approach significantly reduced infrastructure costs and operational overhead while enabling teams to move faster through:

- Cost efficiency: Shared control planes and pooled compute reduce idle capacity and duplicated tooling

- Operational simplicity: Fewer clusters to secure and upgrade, with unified logging, monitoring, and alerting

- Faster onboarding: Teams deploy in minutes by creating a namespace, binding RBAC, and applying NetworkPolicies and quotas

- Consistent governance: Centralized policy baselines for pod security, networking, quotas, and IAM

- Better utilization: The scheduler balances uneven load across tenants for higher efficiency

- Platform foundation: Enables self-service workflows, GitOps, and namespace-level cost allocation

The challenge with dedicated Kubernetes clusters

Traditional dedicated cluster architectures introduced several limitations:

- High costs: Each team required its own control plane and worker nodes, leading to substantial infrastructure expenses

- Maintenance overhead: Dedicated clusters demanded specialized maintenance for cluster management, ingress configuration, certificate handling, and security patching

- Resource waste: Underutilized clusters led to inefficient compute allocation

- Operational complexity: Maintaining consistent standards across isolated environments was difficult

The solution

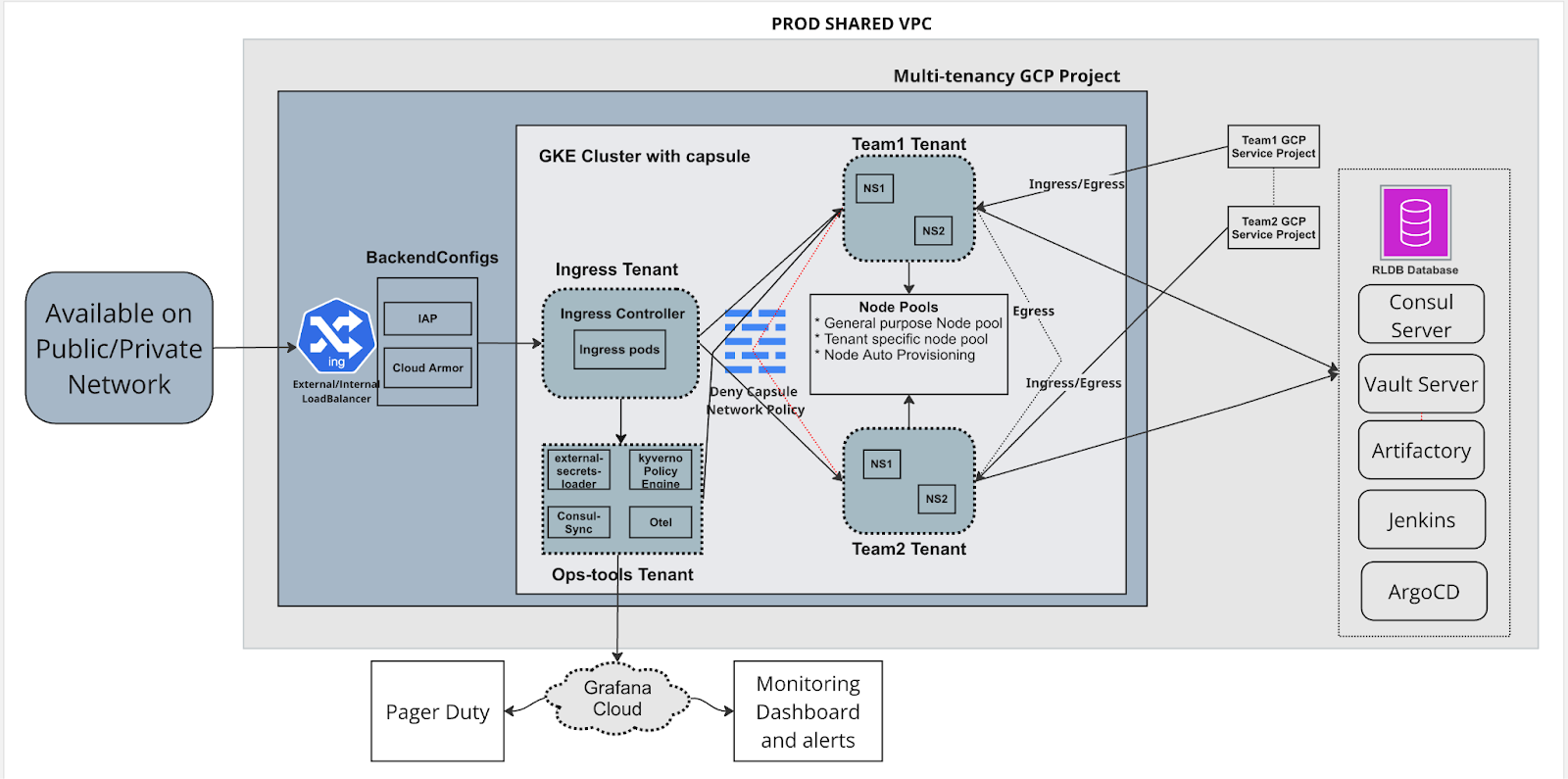

Our MTTN approach consolidates multiple teams and workloads into shared Kubernetes clusters while enforcing strict isolation and security boundaries. The architecture is built on namespace-based isolation, GitOps-driven automation, and defense-in-depth security controls.

We provision foundational infrastructure – projects, networking, and GKE clusters – using Terraform and Atlantis, then layer shared platform components on top.

Multi-tenant cluster diagram

Core components

Our new MTTN implementation leverages several key technologies and architectural patterns, including:

- Namespace-based isolation

- Capsule multi-tenancy controller

- Infrastructure as code

- GitOps deployment pipeline

- Ingress options and traffic isolation

- Security and compliance

- Monitoring and observability

1. Namespace-based isolation

Namespaces serve as the primary isolation boundary, with each tenant receiving dedicated namespaces. This provides:

- Resource isolation via ResourceQuotas

- Network isolation using NetworkPolicies

- Security isolation through RBAC policies

- Separation of secrets and configuration

ResourceQuotas are enforced per namespace to prevent resource exhaustion attacks and ensure fair resource allocation. This prevents one tenant from impacting the performance or availability of others.
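As a minimal sketch of the per-namespace quota described above, the Python below builds a `ResourceQuota` manifest as a plain dict and prints it as JSON, which `kubectl apply -f` accepts just as it accepts YAML. The namespace name and limit values are hypothetical; in practice they would come from each tenant's requirements.

```python
import json


def resource_quota(namespace: str, cpu: str, memory: str, pods: int) -> dict:
    """Build a ResourceQuota that caps a tenant namespace's total resource usage."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {
            "hard": {
                # Upper bounds on the sum of requests/limits across all pods in the namespace.
                "requests.cpu": cpu,
                "requests.memory": memory,
                "limits.cpu": cpu,
                "limits.memory": memory,
                # Object-count quota: caps how many pods the tenant can run at once.
                "pods": str(pods),
            }
        },
    }


if __name__ == "__main__":
    # Hypothetical tenant namespace and limits.
    manifest = resource_quota("team-a-dev", cpu="8", memory="32Gi", pods=50)
    print(json.dumps(manifest, indent=2))
```

Once a namespace hits any of these ceilings, the API server rejects new pods that would exceed it, so one tenant's runaway deployment cannot starve the others.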

2. Capsule multi-tenancy controller

Capsule automates tenant lifecycle management and restricts namespace creation and cross-tenant operations to privileged operators. This enforces strong tenant isolation and reduces the risk of accidental or unauthorized access.

Capsule enables:

- Automated tenant provisioning

- Tenant-level policy enforcement

- Resource quota management

- Clear security boundaries
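A Capsule tenant is itself a Kubernetes object. The sketch below builds one for a hypothetical team; the field names assume Capsule's `capsule.clastix.io/v1beta2` `Tenant` CRD and should be checked against the installed Capsule version. Owners in the named group can create namespaces, but Capsule attaches each one to this tenant and refuses new ones once the namespace quota is reached.

```python
import json


def capsule_tenant(name: str, owner_group: str, max_namespaces: int) -> dict:
    """Build a Capsule Tenant (field names assume the capsule.clastix.io/v1beta2 CRD)."""
    return {
        "apiVersion": "capsule.clastix.io/v1beta2",
        "kind": "Tenant",
        # Tenants are cluster-scoped, so there is no metadata.namespace.
        "metadata": {"name": name},
        "spec": {
            # Members of this group may create namespaces, but only inside this tenant.
            "owners": [{"kind": "Group", "name": owner_group}],
            # Caps how many namespaces the tenant can own.
            "namespaceOptions": {"quota": max_namespaces},
        },
    }


if __name__ == "__main__":
    # Hypothetical tenant and owner group names.
    print(json.dumps(capsule_tenant("team-a", "team-a-admins", 4), indent=2))
```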

3. Infrastructure as code

All MTTN clusters are provisioned and managed through Terraform, enabling:

- Automated cluster creation and configuration

- Consistent infrastructure across environments

- Version-controlled infrastructure changes

- Rapid disaster recovery through code-based recreation

4. GitOps deployment pipeline

Our deployment pipeline combines Jenkins with Argo CD to support GitOps workflows:

- Automated Docker image builds and pushes

- Helm chart validation and deployment

- Environment promotion (dev → QA → staging → prod)

- Automated certificate management

- Declarative onboarding and delivery

- Self-service deployments for teams
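The promotion flow and the Argo CD side of the pipeline can be sketched as follows. This assumes one Argo CD `Application` per app and environment, a `values-<env>.yaml` file next to the chart, and an `<app>-<env>` namespace naming scheme; all three are illustrative conventions, not necessarily LiveRamp's. Jenkins is left out: in this picture it builds and pushes the image, and Argo CD syncs whatever the Git repository declares.

```python
import json
from typing import Optional

# Promotion order from the pipeline description: dev → QA → staging → prod.
ENVIRONMENTS = ["dev", "qa", "staging", "prod"]


def next_environment(current: str) -> Optional[str]:
    """Return the environment a release is promoted to next, or None after prod."""
    idx = ENVIRONMENTS.index(current)  # raises ValueError for an unknown environment
    return ENVIRONMENTS[idx + 1] if idx + 1 < len(ENVIRONMENTS) else None


def argocd_application(app: str, env: str, repo_url: str, chart_path: str) -> dict:
    """Build an Argo CD Application that syncs a Helm chart into a per-env namespace."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": f"{app}-{env}", "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,
                "targetRevision": "HEAD",
                "path": chart_path,
                # Per-environment overrides layered on the chart's defaults.
                "helm": {"valueFiles": [f"values-{env}.yaml"]},
            },
            "destination": {
                "server": "https://kubernetes.default.svc",
                "namespace": f"{app}-{env}",  # hypothetical naming convention
            },
            # Keep the cluster converged on Git: prune removed objects, revert manual drift.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


if __name__ == "__main__":
    # Hypothetical app name and repository URL.
    app = argocd_application("billing", "qa", "https://git.example.com/org/deploy.git", "charts/billing")
    print(json.dumps(app, indent=2))
    print(next_environment("qa"))  # staging
```

Keeping promotion as a Git change (bumping the image tag in the next environment's values file) is what makes the rollback story later in this post work: reverting the commit is the rollback.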

5. Ingress options and traffic isolation

We support both Nginx and Istio ingress controllers to meet different tenant needs. Each uses class-based routing and strict namespace mapping to maintain isolation.

Before rollout, ingress routes were validated end to end using echo endpoints to verify isolation and reliability across ingress paths.
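As an illustration of class-based routing, the sketch below builds an `Ingress` pinned to one controller via `ingressClassName`, with a `cert-manager.io/cluster-issuer` annotation so cert-manager provisions the TLS secret. The allowed-class set, issuer name, and host are hypothetical. Because an `Ingress` backend can only reference a Service in its own namespace, a tenant's route cannot point at another tenant's workloads.

```python
import json

# Hypothetical set of ingress classes the platform offers to tenants.
ALLOWED_CLASSES = {"nginx", "istio"}


def tenant_ingress(namespace: str, host: str, service: str, port: int,
                   ingress_class: str, cluster_issuer: str) -> dict:
    """Build an Ingress served by a single controller, with cert-manager-issued TLS."""
    if ingress_class not in ALLOWED_CLASSES:
        raise ValueError(f"unsupported ingress class: {ingress_class}")
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": service,
            "namespace": namespace,
            # cert-manager sees this annotation and creates the Secret named in spec.tls.
            "annotations": {"cert-manager.io/cluster-issuer": cluster_issuer},
        },
        "spec": {
            # Only the controller that owns this class picks up the route.
            "ingressClassName": ingress_class,
            "tls": [{"hosts": [host], "secretName": f"{service}-tls"}],
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    # Backend Services are resolved in the Ingress's own namespace only.
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(tenant_ingress("team-a-prod", "app.example.com", "web", 8080,
                                    "nginx", "letsencrypt-prod"), indent=2))
```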

6. Security and compliance

Our MTTN implementation includes comprehensive security controls:

- Workload Identity: Secure authentication between Kubernetes and Google Cloud services

- External Secrets Operator: Vault-backed secret management

- Kyverno policies: Automated policy enforcement

- Network policies: NetworkPolicies are applied at the namespace level to control traffic flow. By default, these policies prevent pods in one namespace from communicating with pods in another, blocking unauthorized network access between tenants.

- RBAC: Role-based access control with tenant-specific permissions. RBAC policies are scoped to namespaces, so users and service accounts only have permissions within their assigned namespace. Explicit permissions are required for any action, and cross-namespace access is not granted by default.

- Snyk: Kubernetes scanning, monitoring, and runtime security
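The namespace-level network and RBAC rules described above map onto two standard Kubernetes objects. The sketch below builds both; the namespace, group name, and the choice of the built-in `edit` ClusterRole are assumptions for illustration.

```python
import json


def same_namespace_only_policy(namespace: str) -> dict:
    """NetworkPolicy: pods in `namespace` accept ingress only from pods in the same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector = every pod in the namespace
            "policyTypes": ["Ingress"],
            # A bare podSelector in `from` matches pods in this policy's namespace only,
            # so traffic from other tenants' namespaces is dropped.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }


def tenant_role_binding(namespace: str, group: str, cluster_role: str = "edit") -> dict:
    """RoleBinding: grants `group` a ClusterRole's permissions inside `namespace` only."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{group}-{cluster_role}", "namespace": namespace},
        "subjects": [{"kind": "Group", "name": group, "apiGroup": "rbac.authorization.k8s.io"}],
        # Referencing a ClusterRole from a RoleBinding scopes its rules to this namespace.
        "roleRef": {"kind": "ClusterRole", "name": cluster_role, "apiGroup": "rbac.authorization.k8s.io"},
    }


if __name__ == "__main__":
    # Hypothetical tenant namespace and group.
    for obj in (same_namespace_only_policy("team-a-prod"), tenant_role_binding("team-a-prod", "team-a-devs")):
        print(json.dumps(obj, indent=2))
```

In a real cluster this policy would also need a rule admitting traffic from the ingress controller's namespace, or external requests would be dropped too. NetworkPolicies only take effect when the cluster enforces them; on GKE that means Dataplane V2 or network policy enforcement enabled.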

7. Monitoring and observability

Each tenant receives comprehensive monitoring capabilities:

- Grafana dashboards: Customized dashboards for application and infrastructure monitoring

- Alerting: Automated alerts for performance and availability issues

- Cost monitoring: Google Billing dashboard integration for resource usage tracking

- Log aggregation: Centralized logging in Grafana Loki with tenant-specific filtering
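Tenant-specific log filtering in Loki comes down to a LogQL stream selector on a namespace label. The sketch below builds a `query_range` URL for one tenant; it assumes the log agent attaches a `namespace` label (a common but configurable default), and the Loki address is hypothetical. A filter like this is a convenience, not access control: limiting which namespaces a tenant may read has to be enforced separately.

```python
from urllib.parse import urlencode


def tenant_log_query_url(loki_base: str, namespace: str, contains: str,
                         start_ns: int, end_ns: int, limit: int = 100) -> str:
    """Build a Loki query_range URL restricted to one tenant namespace."""
    # LogQL: select streams by the namespace label, then keep lines containing `contains`.
    logql = f'{{namespace="{namespace}"}} |= "{contains}"'
    # start/end are Unix epoch timestamps in nanoseconds.
    params = {"query": logql, "start": start_ns, "end": end_ns, "limit": limit}
    return f"{loki_base.rstrip('/')}/loki/api/v1/query_range?{urlencode(params)}"


if __name__ == "__main__":
    # Hypothetical Loki endpoint and a one-hour window.
    end = 1_700_000_000 * 10**9
    print(tenant_log_query_url("http://loki.monitoring:3100", "team-a-prod", "ERROR", end - 3600 * 10**9, end))
```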

Business and technical benefits

Cost optimization

- Reduced infrastructure costs: Shared resources across tenants reduce per-tenant costs by approximately 60–70%

- Eliminated redundancy: Single cluster maintenance instead of multiple dedicated clusters

- Resource efficiency: Better utilization through shared node pools and autoscaling

Operational excellence

- Standardized operations: Consistent tooling and processes across all tenants

- Reduced maintenance overhead: Centralized cluster management and updates

- Automated deployments: GitOps-driven deployment pipeline reduces manual intervention

- Disaster recovery: Infrastructure as code (IaC) enables rapid cluster recreation

Developer experience

- Self-service provisioning: Teams can provision tenants through simple PR workflows

- Rich tooling ecosystem: Access to Grafana, Vault, Consul, and other enterprise tools

- Automated certificate management: SSL/TLS certificates automatically provisioned and rotated

- Generic Helm charts: Pre-configured charts with built-in best practices

Scalability and performance

- Horizontal scaling: Easy addition of new tenants without infrastructure changes

- Autoscaling: Node Auto Provisioning optimizes resource allocation

- Multi-region support: Global deployment across U.S., EU, and Australia

- Load balancing: Unified ingress controllers handle traffic distribution

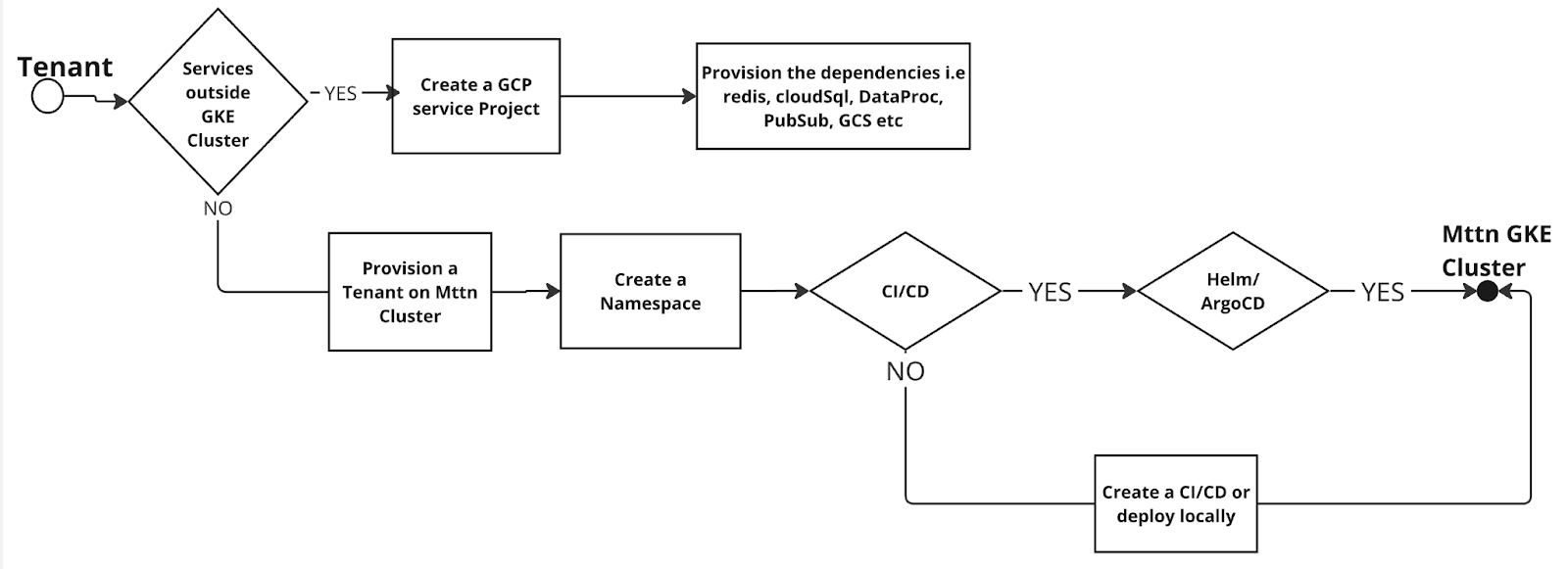

Tenant onboarding process

- Provisioning: DevOps assists in creating a tenant in the required environment (dev/QA/staging/prod) via a PR in the kubernetes-deployments repo.

- Resource quotas: Automatic resource limit assignment based on tenant requirements

- Network policies: Default security policies applied to tenant namespaces

- Namespace creation: The tenant owner or DevOps creates a namespace before application deployment

- CI/CD integration: Applications are deployed with Helm through CI/CD pipelines (Jenkins, Argo CD)

- Ingress and DNS: PRs are created to add application DNS names and configure ingress

- Security hardening: Workload identity and secret management setup

- Firewall and egress: Egress traffic is blocked by default in production external clusters, but can be allowed for specific services as needed.

- Certificate provisioning: Automatic SSL/TLS certificate management

- Monitoring setup: Grafana dashboards and alerting configuration
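Two of the onboarding steps, Workload Identity and default-blocked egress, can be expressed as namespace-scoped objects. The sketch below assumes GKE Workload Identity is enabled on the cluster; the project, namespace, and service account names are hypothetical. Blocking egress with a NetworkPolicy is only one option; the production clusters described above may enforce it with VPC firewall rules instead.

```python
import json


def workload_identity_sa(namespace: str, ksa: str, gsa_email: str) -> dict:
    """Kubernetes ServiceAccount linked to a Google service account via GKE Workload Identity."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": ksa,
            "namespace": namespace,
            # GKE reads this annotation to let pods running as `ksa` act as `gsa_email`.
            "annotations": {"iam.gke.io/gcp-service-account": gsa_email},
        },
    }


def wi_member(project: str, namespace: str, ksa: str) -> str:
    """IAM member string for the KSA, used when granting roles/iam.workloadIdentityUser."""
    return f"serviceAccount:{project}.svc.id.goog[{namespace}/{ksa}]"


def default_deny_egress(namespace: str) -> dict:
    """NetworkPolicy: block all egress from the namespace except DNS lookups."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-egress", "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Egress"],
            # Only DNS (UDP/TCP 53) is allowed; a rule with no `to` matches any destination.
            "egress": [{"ports": [{"protocol": "UDP", "port": 53}, {"protocol": "TCP", "port": 53}]}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(workload_identity_sa("team-a-prod", "app", "app@my-proj.iam.gserviceaccount.com"), indent=2))
    print(wi_member("my-proj", "team-a-prod", "app"))
    print(json.dumps(default_deny_egress("team-a-prod"), indent=2))
```

The annotation alone is not enough: the Google service account must also grant `roles/iam.workloadIdentityUser` to the member string that `wi_member` returns. Allowing a specific external service then means adding a narrower egress rule alongside the default deny.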

Tenant onboarding flowchart:

What do tenants get?

- Unified ingress: Choice of Istio or Nginx for ingress

- Monitoring and logging: Grafana dashboards and alerts, including resource usage dashboards

- Billing reports: Namespace-level usage billing in GCP

- Optimized autoscaling: Node Auto Provisioning for efficient scaling

- Security and maintenance: cert-manager for DNS certificate automation, Snyk for container insights, and all cluster-level maintenance handled by DevOps.

- Lower costs: Shared resources deliver a lower cost per tenant.

- Viewer access: The eng-all group can be granted read-only access to production MTTN clusters via IAM roles, managed as code (Terraform) for auditability and compliance.

- Documentation: All changes, access lists, and architecture diagrams are documented for compliance and transparency.

Disaster recovery and business continuity

Our MTTN implementation includes comprehensive business continuity and disaster recovery (BCDR) capabilities:

Infrastructure recovery

- Terraform recreation: Complete cluster recreation from IaC

- Automated tooling: DevOps tools automatically reinstalled and configured

- Application redeployment: Tenant applications redeployed through existing CI/CD pipelines

Recovery scenarios

- GCP regional outages: Multi-region deployment provides geographic redundancy

- Human error recovery: Automated rollback capabilities through GitOps

- Security incidents: Isolated tenant environments limit blast radius

Results

The MTTN implementation at LiveRamp marks a major evolution in our infrastructure strategy. By consolidating teams onto shared Kubernetes clusters with strong isolation, we achieved:

- 60–70% infrastructure cost reduction

- Standardized operations across engineering teams

- Improved developer experience through self-service workflows

- Enhanced security via automated policy enforcement

- Better resource utilization through shared infrastructure

This multi-tenant approach has not only reduced costs but also improved our operational efficiency, security, and developer productivity. As LiveRamp continues to scale, MTTN provides a durable foundation for future growth – balancing flexibility, performance, and isolation.

For more technical details and implementation guides, check out LiveRamp’s engineering blogs or reach out to ops@liveramp.com.