The Shift: SQLifying Our System

At LiveRamp, we believe a clean and efficient data ecosystem is the backbone of any successful data-driven enterprise. The mission remains grounded in providing seamless and secure data solutions as we continue to grow and innovate. Recently, our efforts have centered around “SQLifying” our system, a significant shift from the traditional Spark and Hadoop models to a more SQL-based architecture.

This process signifies a major leap in our journey to adopting the modern data stack, and it couldn’t have come at a better time. It also helps us take data movement to a minimum—a decision based on two core principles: efficiency and privacy. Our shift to SQL is thus not just a technological change but a strategic one, enabling us to connect where our customers store their data, their source of truth.

Integrating Snowflake and BigQuery Clean Rooms

One fundamental aspect of our transition involves reducing the extent of federation in our operations. While useful in specific scenarios, Federated SQL query execution necessitates on-the-fly data movement, which we’re striving to minimize. This ambition led us to integrate into Snowflake and BigQuery clean room solutions by leveraging their data-sharing capabilities. We discovered that in scenarios where our customers shared the same cloud and region, we didn’t need to perform federation at all! In addition to our embedded identity resolution solution for Snowflake and BigQuery, this clean room integration helped us to bridge the gap for an end-to-end solution for our customers directly in their data warehouse.

Compute Pushdown Capabilities with Cost-Based Optimizer (CBO)

Another cornerstone of our new SQL-based system is the full “compute” pushdown approach. This pushdown implies that all predicate, aggregation, join, and projection operations run in a single data warehouse, enabling efficient and localized computation. Our customers can share tables into our accounts on cloud providers like Snowflake and BigQuery, create user-defined functions (UDFs) on these tables, and share them with their partners. This flexibility is instrumental in breaking down queries into manageable “silos” or accounts in each cloud provider’s regions.

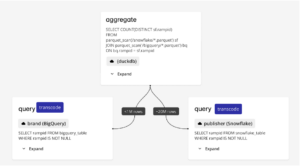

Let’s say that the user executed the following query:

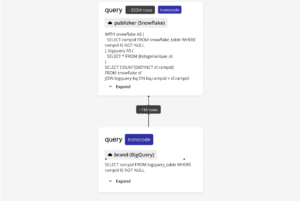

When we execute a query on two tables, one in Snowflake and another in BigQuery, we break down the query into two silos and estimate the amount of data to pull from each side. We only pull the data we need for join, so if you have filters or only reference a single row in the query, we do predicate and projection pushdown, respectively.

Depending on query size and compliance, we adopt a two-pronged approach:

1.Both Snowflake and BigQuery are instructed to write the intermediate query results into our data lake on our cloud provider. The intermediate query results are written directly from Snowflake and BigQuery using native data export features to data lake and we clean up all the assets after the query run. DuckDB’s superior vertical scaling capabilities enable us to effectively perform this final aggregation.

2. If one silo contains significantly less data than the other, we pull data into our data lake from the smaller one and leverage the other silo’s computing power to keep data movement minimal.

We have adopted query caching mechanisms to improve performance and responsiveness, akin to how Snowflake and BigQuery query caching operates. Additionally, we empower our customers with the ability to control cache eviction policies, providing them with greater flexibility and control over their data.

Lastly, we deploy native UDFs to our Snowflake and BigQuery accounts to push down more computing for use cases such as transcoding, ETL, and machine learning with Python. These locally deployed UDFs empower our customers with faster and more reliable operations, contributing to an overall enhanced service experience.

Future Work

In addition to Snowflake and BigQuery, we’re also enabling our clients to connect their data lake with Iceberg tables, a vendor-neutral technology supported by most cloud vendors, including Amazon Web Services (AWS). With Apache Iceberg, we can connect customers’ AWS accounts through their S3 buckets and Google Cloud accounts through Google Cloud Storage (GCS) to query data in their data lake and push down the compute to the cloud data warehouses, also in service of minimizing data movement.

We also do discovery around provisioning cloud resources such as Snowflake warehouses and allocating BigQuery slots to save cloud resources and optimize the query performance. All of this provisioning is done under the hood, so our customers don’t need to worry about the low-level cloud resources we’re using. Another optimization we’re discovering is using the intermediate query caches across queries to minimize the data movement, we first need to make sure that the cache preserves the privacy rules and can only be used by the user who submitted the query.

At LiveRamp, we see these transitions not merely as changes in our technology stack but as opportunities to deliver superior value to our customers. We commit to bringing you a cleaner, faster, and more secure data ecosystem. It’s an exciting time to be a part of the LiveRamp family, and we’re thrilled to have you on this journey with us. Together, let’s shape the future of data.