February 13, 2026

From On-Prem to Cloud: Building a More Reliable Analytics Platform with Citrix Cloud

Noel Zhang

Engineering & Technology

February 12, 2026

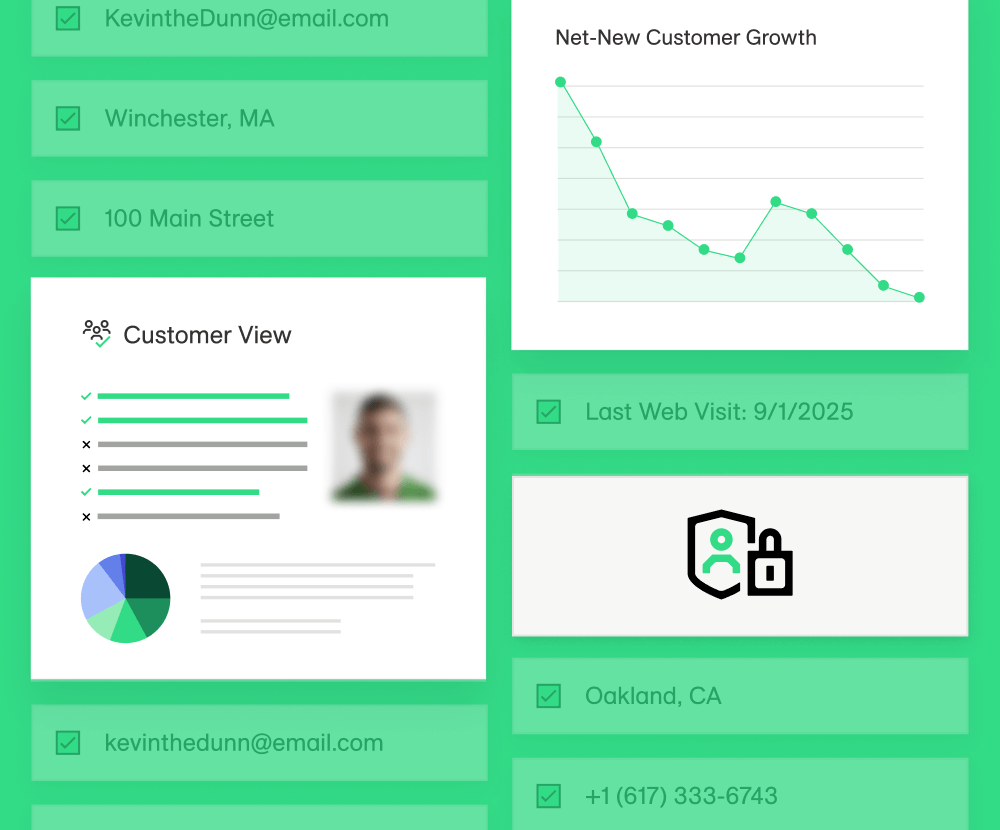

Access Granted: How LiveRamp's Infrastructure Elevated the Customer Experience

AL Victor De Leon

Engineering & Technology

January 9, 2026

7 min read

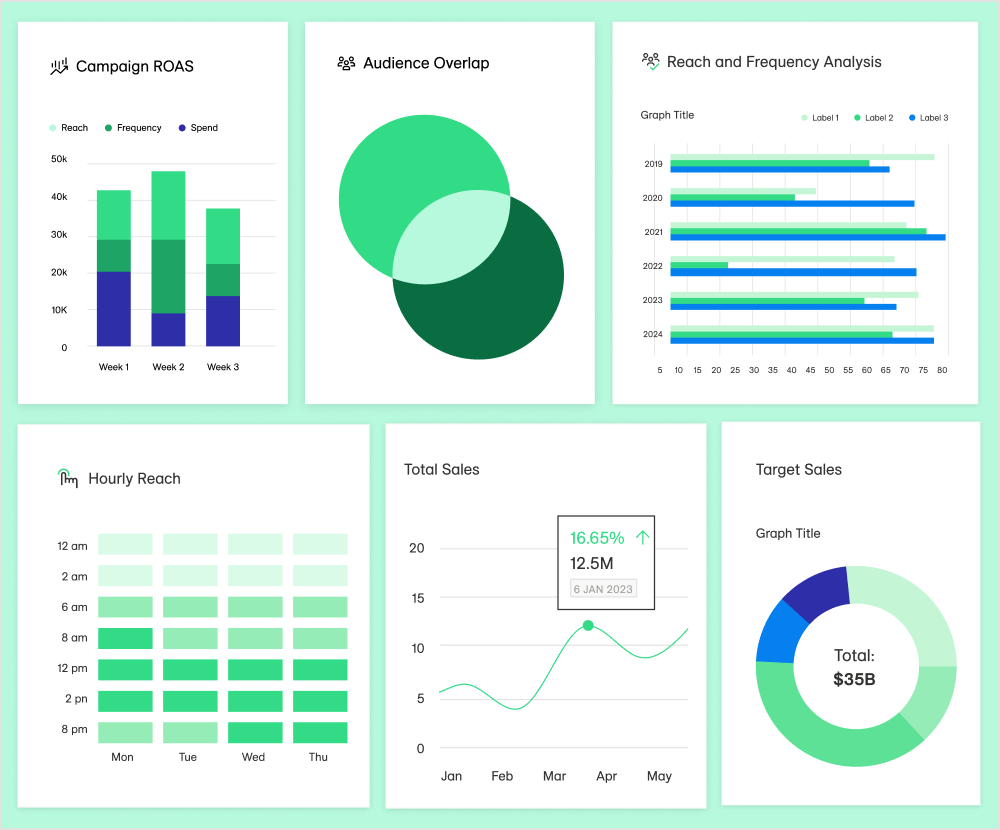

Marketing Measurement Strategy: Optimize Your Media Performance

LiveRamp

December 29, 2025

Building a Secure and Scalable Multi-Tenancy Model on GKE

Mahendra Sahu

Engineering & Technology

December 18, 2025

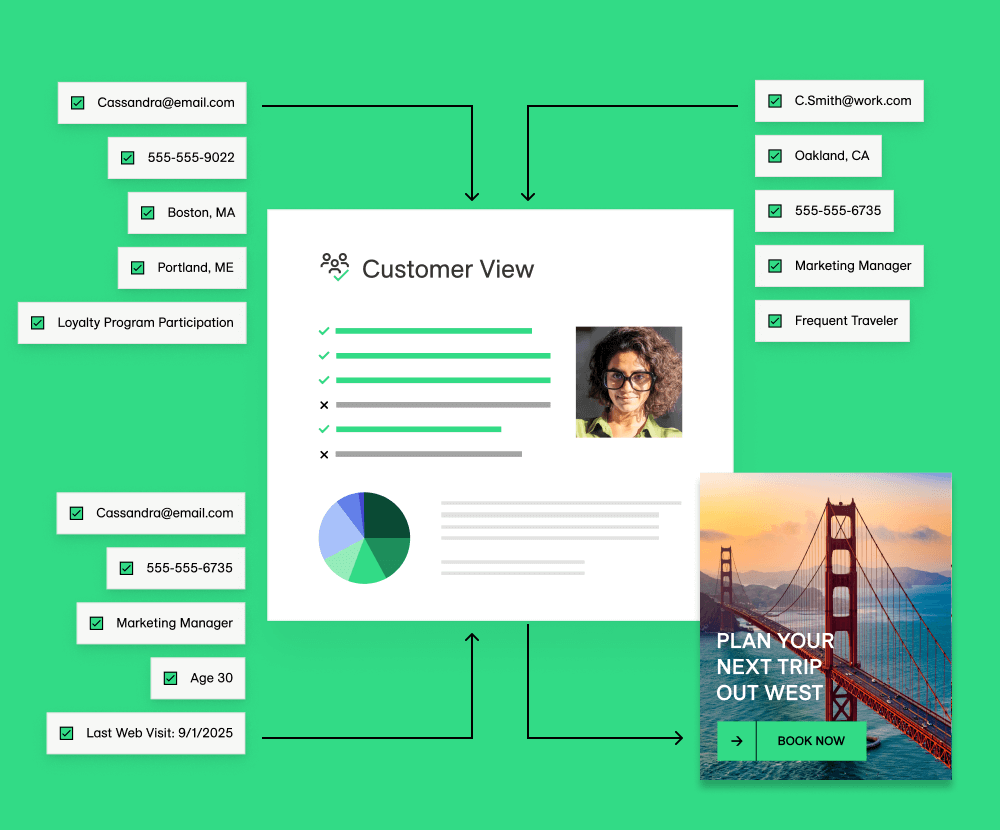

Audience Segmentation with AI: How It Works and Why It Matters

LiveRamp

December 11, 2025

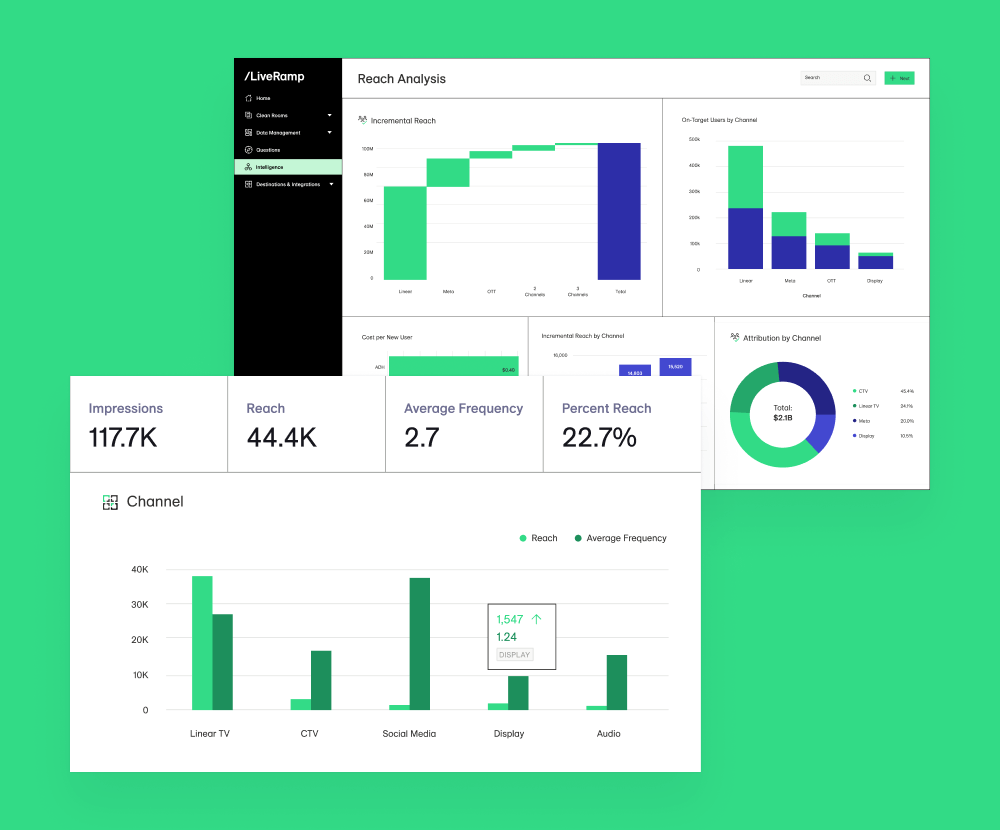

What is Audience Measurement? An Overview of Strategies

LiveRamp

Marketing Measurement

December 8, 2025

From Hackweek to High-Performance: How Real-Time Caching Cut Costs and Improved Speed

Akshat Shah

Engineering & Technology

December 4, 2025

Match-Ready Infrastructure: LiveRamp’s Winning Identity API for the FIFA Club World Cup

Varun Gujarathi & Martin Banson

Data Collaboration

Engineering & Technology
